1. Overview

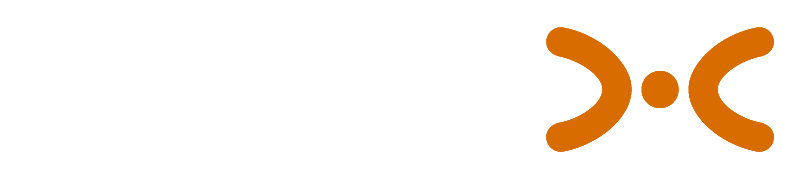

Biodiversity is declining at unprecedented rates due to climate change, pollution, deforestation, and other human pressures. Traditional monitoring methods (field surveys, manual species identification, and sporadic sampling) are resource-intensive and rarely deliver results in real time. This project proposes an AI-powered environmental and biodiversity monitoring system that integrates sensor networks, remote sensing, and machine learning to track ecosystem health continuously and detect critical changes in biodiversity.

By combining satellite imagery, acoustic monitoring, drone data, and environmental sensors with AI-driven analytics, the system aims to deliver actionable insights for conservationists, policymakers, and local communities.

2. Problem Statement

Conservation biology faces three major challenges:

- Fragmented data collection across ecosystems.

- Slow detection of biodiversity loss, which is often noticed only after significant damage has occurred.

- Limited capacity to analyze multi-modal data (satellite, acoustic, genetic).

An integrated, scalable AI-driven platform is required to bridge these gaps, enabling real-time monitoring and early detection of environmental threats.

3. Objectives

- Develop an AI-based platform to process and analyze environmental and biodiversity data.

- Integrate multi-source data: remote sensing, in-situ sensors, acoustic signals, and camera traps.

- Detect and classify species, track populations, and monitor habitat conditions.

- Provide early-warning alerts for biodiversity threats (deforestation, pollution, invasive species).

- Enable open-access dashboards for governments, NGOs, and researchers.
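
The early-warning objective can be illustrated with a minimal sketch that flags a sudden drop in an indicator relative to its recent baseline. The indicator (weekly detections of a focal species), the six-period window, and the z-score threshold are all assumptions chosen for illustration, not parameters defined by this proposal.

```python
from statistics import mean, stdev


def early_warning_flags(series, window=6, z_threshold=-2.0):
    """Flag values that fall far below the trailing-window baseline.

    series: per-period values of a biodiversity indicator (hypothetical,
            e.g. weekly detections of a focal species).
    Returns a list of booleans aligned with `series`.
    """
    flags = []
    for i, value in enumerate(series):
        baseline = series[max(0, i - window):i]
        if len(baseline) < window:
            flags.append(False)  # not enough history to judge yet
            continue
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            flags.append(value < mu)  # flat baseline: any drop is notable
            continue
        flags.append((value - mu) / sigma <= z_threshold)
    return flags


# Illustrative data only: a stable series followed by a sharp decline.
weekly_detections = [42, 40, 44, 41, 43, 42, 41, 18]
flags = early_warning_flags(weekly_detections)
```

A trailing baseline keeps the rule simple and explainable, although a deployed system would likely also need to account for seasonality and sensor outages.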

4. Research Questions

- Can AI models trained on multi-modal data improve biodiversity monitoring compared to traditional methods?

- How effective are acoustic and image recognition models for species detection in noisy, complex ecosystems?

- Can real-time monitoring predict biodiversity loss before it becomes irreversible?

- How can open, explainable AI improve trust and adoption in environmental decision-making?
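
One way to make the second question measurable is to score model detections against expert-labeled clips or images, species by species. The sketch below computes precision, recall, and F1 per species; the species names and labels are invented for illustration.

```python
from collections import Counter


def per_species_scores(true_labels, predicted_labels):
    """Return {species: {"precision", "recall", "f1"}} for paired labels."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(true_labels, predicted_labels):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted species was wrong
            fn[truth] += 1  # true species was missed
    scores = {}
    for species in set(true_labels) | set(predicted_labels):
        p_den = tp[species] + fp[species]
        r_den = tp[species] + fn[species]
        precision = tp[species] / p_den if p_den else 0.0
        recall = tp[species] / r_den if r_den else 0.0
        pr_sum = precision + recall
        f1 = 2 * precision * recall / pr_sum if pr_sum else 0.0
        scores[species] = {"precision": precision, "recall": recall, "f1": f1}
    return scores


# Hypothetical expert labels versus model output for six clips.
truth = ["frog", "frog", "owl", "owl", "owl", "cricket"]
predicted = ["frog", "owl", "owl", "owl", "cricket", "cricket"]
scores = per_species_scores(truth, predicted)
```

Reporting scores per species rather than as one overall accuracy figure makes weak performance on rare or acoustically similar species visible.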

5. Methodology

5.1 Data Sources

- Remote Sensing: NASA Landsat, ESA Sentinel, MODIS.

- Field Sensors: IoT devices measuring temperature, humidity, air/water quality.

- Acoustic Monitoring: Rainforest Connection recordings, with BirdNET as a pretrained reference classifier for bird sounds.

- Camera Traps & Drones: Wildlife Insights and camera-trap datasets published through the Global Biodiversity Information Facility (GBIF), alongside drone imagery collected by the project.

- Citizen Science: iNaturalist, eBird.

5.2 Data Processing

- Image preprocessing: segmentation, object detection for species.

- Acoustic signal cleaning and spectrogram conversion.

- Environmental data normalization.

- Data fusion for multi-modal learning.
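
The normalization and fusion steps can be sketched as follows, assuming the simplest possible scheme: z-score scaling per sensor stream, then alignment of streams on a shared hourly index. The stream names and the hourly granularity are assumptions; a real pipeline would also need to handle gaps, time zones, and resampling.

```python
from collections import defaultdict
from statistics import mean, pstdev


def zscore(values):
    """Scale values to zero mean and unit variance (population std)."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def fuse_by_hour(streams):
    """Align streams on a shared hour index.

    streams: {feature_name: [(hour_index, value), ...]}
    Returns {hour_index: {feature_name: value}}, keeping only hours for
    which every stream has a reading. If a stream repeats an hour, the
    last reading wins.
    """
    fused = defaultdict(dict)
    for name, readings in streams.items():
        for hour, value in readings:
            fused[hour][name] = value
    return {
        hour: features
        for hour, features in sorted(fused.items())
        if len(features) == len(streams)
    }


# Illustrative data: temperature for hours 0-2, call counts for hours 1-3.
temperature_z = zscore([20.0, 22.0, 24.0])
fused = fuse_by_hour({
    "temperature_z": list(zip([0, 1, 2], temperature_z)),
    "bird_calls": [(1, 14), (2, 9), (3, 11)],
})
```

Only hours 1 and 2 survive the join here, which shows how quickly strict alignment can discard data when sensors report on different schedules.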

5.3 AI Models

- Computer Vision: CNN-based models for species identification in images and video, with YOLOv8 for object detection and EfficientNet for classification.

- Acoustic Models: Deep CNNs applied to spectrograms to classify bird, amphibian, and insect calls.

- Predictive Models: Random Forests and LSTMs for forecasting biodiversity trends.

- Ecosystem Models: Graph Neural Networks (GNNs) for modeling interactions among species and habitats.
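
To make the graph-based modeling idea concrete, the sketch below shows only the neighborhood aggregation step that GNN layers build on: each node averages its neighbors' feature vectors and blends the result with its own. It deliberately omits learned weights and nonlinearities, and the species, edges, and two-dimensional features are invented for illustration.

```python
def mean_aggregate(features, edges):
    """One round of mean-neighbor aggregation on an undirected graph.

    features: {node: [float, ...]} with equal-length vectors
    edges:    iterable of (node_a, node_b) pairs
    Returns new features: the average of each node's own vector and the
    mean of its neighbors' vectors. Isolated nodes keep their features.
    """
    neighbors = {node: [] for node in features}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    updated = {}
    for node, own in features.items():
        messages = [features[n] for n in neighbors[node]]
        if not messages:
            updated[node] = list(own)
            continue
        neighbor_mean = [sum(col) / len(messages) for col in zip(*messages)]
        updated[node] = [(o + m) / 2 for o, m in zip(own, neighbor_mean)]
    return updated


# Hypothetical interaction graph: fig tree <-> fruit bat <-> owl.
features = {
    "fig_tree": [1.0, 0.0],
    "fruit_bat": [0.0, 1.0],
    "owl": [0.0, 0.5],
}
edges = [("fig_tree", "fruit_bat"), ("fruit_bat", "owl")]
updated = mean_aggregate(features, edges)
```

In a trained GNN, this averaging would be followed by a learned linear transform and repeated over several layers so that information propagates across multi-step interactions.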

6. Technical Approach

- Backend: Cloud-based infrastructure (AWS/GCP) for ingestion and processing.

- Data Lake: Storage for heterogeneous datasets (satellite, audio, sensors).

- AI Pipeline: Python with PyTorch and TensorFlow, plus Hugging Face libraries for NLP on ecological text data.

- Frontend: Web-based dashboard with maps, alerts, and trend visualizations.
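
As a rough illustration of how heterogeneous inputs might be registered in the data lake, the sketch below defines one metadata record shared by all modalities and derives a storage partition from it. The field names, the `modality=/date=` partition layout, and the example URI are assumptions for illustration, not a schema specified by this proposal.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Observation:
    """Metadata for one raw file, whatever its modality (hypothetical schema)."""
    source: str            # device or mission identifier
    modality: str          # "satellite", "audio", or "sensor"
    captured_at: datetime  # timezone-aware capture time
    latitude: float
    longitude: float
    payload_uri: str       # location of the raw file

    def partition_key(self) -> str:
        """Group records by modality and UTC calendar day."""
        day = self.captured_at.astimezone(timezone.utc).strftime("%Y-%m-%d")
        return f"modality={self.modality}/date={day}"

    def to_json(self) -> str:
        record = asdict(self)
        record["captured_at"] = self.captured_at.isoformat()
        return json.dumps(record, sort_keys=True)


# Illustrative record; the device ID and bucket name are made up.
obs = Observation(
    source="recorder-017",
    modality="audio",
    captured_at=datetime(2024, 5, 1, 23, 30, tzinfo=timezone.utc),
    latitude=-3.4653,
    longitude=-62.2159,
    payload_uri="s3://example-bucket/raw/recorder-017/clip.wav",
)
key = obs.partition_key()
```

Keeping a single metadata record across modalities lets the ingestion layer stay uniform while the raw payloads remain in their native formats.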

7. Expected Outcomes

- A real-time monitoring platform for biodiversity and environmental health.

- Species recognition models validated on diverse ecosystems.

- Interactive dashboard for conservation planning and decision-making.

- Early warning system for ecosystem degradation.

8. Innovation

- Multi-modal integration: remote sensing + in-situ sensors + citizen science.

- AI-driven biodiversity detection at species, population, and ecosystem levels.

- Explainable AI (XAI) for transparent ecological predictions.

- Scalable design applicable to global and local ecosystems.

9. Implementation Roadmap

- Phase 1 (0–6 months): Data integration, baseline models for image and acoustic detection.

- Phase 2 (7–12 months): Multi-modal AI integration, real-time monitoring prototype.

- Phase 3 (13–18 months): Large-scale deployment, dashboards, partnerships with NGOs and governments.

10. Use Cases

- Deforestation Monitoring – Detect forest loss, including suspected illegal logging, from satellite images.

- Wildlife Conservation – Track endangered species populations.

- Pollution Tracking – Monitor air and water quality with IoT sensors.

- Climate Change Research – Assess long-term biodiversity shifts.

- Community Engagement – Empower citizens to contribute biodiversity data.
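
For the deforestation use case, one common starting point is to compare a vegetation index between two dates. The sketch below uses NDVI, defined as (NIR - Red) / (NIR + Red), and flags pixels whose NDVI drops sharply. The 0.3 drop threshold and the toy reflectance values are assumptions; the method detects vegetation loss only and cannot by itself establish whether clearing was illegal.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    return (nir - red) / total if total else 0.0


def vegetation_loss_mask(before, after, drop_threshold=0.3):
    """Mark pixels whose NDVI fell by at least `drop_threshold`.

    before, after: 2D lists of (nir, red) reflectance pairs covering the
                   same pixels on two dates.
    Returns a 2D list of booleans with the same shape.
    """
    mask = []
    for row_before, row_after in zip(before, after):
        mask.append([
            ndvi(*pixel_b) - ndvi(*pixel_a) >= drop_threshold
            for pixel_b, pixel_a in zip(row_before, row_after)
        ])
    return mask


# Toy 1x2 scene: the first pixel is unchanged, the second loses vegetation.
before = [[(0.5, 0.1), (0.5, 0.1)]]
after = [[(0.5, 0.1), (0.3, 0.25)]]
mask = vegetation_loss_mask(before, after)
```

In practice the two scenes would need cloud masking and seasonal matching before differencing, since clouds and dry-season leaf loss also reduce NDVI.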

11. Potential Challenges

- Data scarcity for rare species.

- High variability in environmental acoustic data.

- Cloud infrastructure costs for large-scale monitoring.

- Integration of heterogeneous datasets in real time.
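
The rare-species problem is often mitigated during training by weighting classes inversely to their frequency. The sketch below uses the widely used "balanced" heuristic, weight = n_samples / (n_classes * class_count); the species and counts are invented for illustration, and weighting is only one of several options (others include oversampling and few-shot methods).

```python
from collections import Counter


def balanced_class_weights(labels):
    """Return {class: weight} so that rare classes count more in the loss."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {
        cls: n_samples / (n_classes * count)
        for cls, count in counts.items()
    }


# Hypothetical training set: 90 common-species images, 10 rare-species images.
labels = ["sparrow"] * 90 + ["harpy_eagle"] * 10
weights = balanced_class_weights(labels)
```

With these counts, each rare-species example carries nine times the weight of a common-species example, so both classes contribute equally to the total loss.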

12. Ethical Considerations

- Ensure that biodiversity data is not exploited for illegal wildlife trade.

- Protect privacy of local communities contributing data.

- Promote open-access principles while respecting indigenous knowledge.

- Keep AI models transparent so that misclassification and bias can be identified and corrected.
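
One practical safeguard against misuse of location data is to generalize coordinates for sensitive species before records reach an open dashboard. The sketch below snaps a point to the center of a coarse grid cell; the 0.1 degree cell size (roughly 11 km of latitude) and the function name are assumptions, and the appropriate precision would need to be set per species with conservation partners.

```python
import math


def generalize_coordinates(lat, lon, cell_degrees=0.1):
    """Replace a precise location with the center of its grid cell."""
    def snap(value):
        return (math.floor(value / cell_degrees) + 0.5) * cell_degrees

    # Round to suppress floating-point noise in the published value.
    return round(snap(lat), 6), round(snap(lon), 6)


# Illustrative point; every location inside the same cell maps to one output.
public_lat, public_lon = generalize_coordinates(-3.4653, -62.2159)
```

Snapping to a fixed grid, rather than adding random noise, means repeated observations at one site cannot be averaged to recover the true location.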

13. Resources Required

- Hardware: IoT sensors, drones, acoustic recorders, satellite access.

- Software: AI frameworks (PyTorch, TensorFlow), GIS tools (QGIS, Google Earth Engine).

- Infrastructure: Cloud servers with GPU acceleration.

- Human Resources: Ecologists, AI engineers, data scientists, software developers.

14. Team Composition

- Project Lead: Expert in biodiversity and conservation.

- AI Specialists: Computer vision, acoustic modeling, predictive analytics.

- Ecologists & Field Biologists: Validation and domain knowledge.

- Developers: Backend, frontend, and data pipeline engineers.

- Stakeholder Liaisons: NGOs, government agencies, local communities.

15. Timeline

- 0–6 months: Data ingestion + baseline AI models.

- 7–12 months: Integration of multi-modal AI, pilot deployments.

- 13–18 months: Full platform with dashboards + large-scale field trials.

16. Budget Estimate

- Personnel: $300,000.

- Equipment (sensors, drones, cameras): $150,000.

- Cloud Infrastructure: $80,000.

- Field Operations & Partnerships: $70,000.

- Total Estimated Budget: $600,000.

17. Expected Impact

- Empower governments and NGOs to detect biodiversity threats early.

- Enable data-driven conservation policies.

- Foster community engagement in biodiversity monitoring.

- Provide global open-access biodiversity insights to accelerate research.

18. References & Data Sources

- NASA Landsat, ESA Sentinel, MODIS.

- GBIF, Wildlife Insights, iNaturalist, eBird.

- Rainforest Connection, BirdNET.

- ENVI, Google Earth Engine.

- Foundational literature in conservation AI (2019–2025).